How Short Can We Go With Sub-Exposures in DSLRs?

Introduction

This is a discussion paper relating to a very common problem faced by the vast army of beginner astro-imagers who are not auto-guiding and have less than exemplary tracking mounts. I hope it will shed some light on a topic that we have all been fumbling with for some time. I use a series of 2-minute frames of the California Nebula for illustrative purposes.

Noise in Current (2006) Model Astro-Capable DSLRs

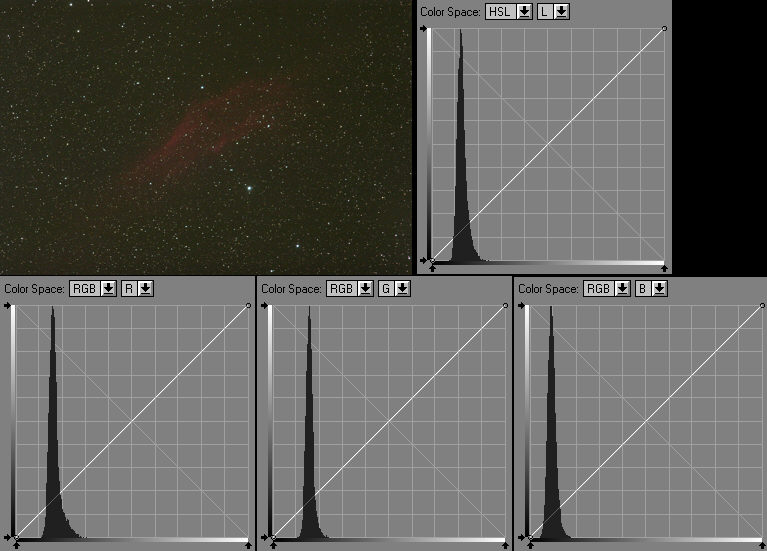

It is assumed that in our post-processing we take all the standard measures, using flats, darks and bias frames for calibration. The flats take care of dust motes and vignetting, the dark frames take care of the thermal signal, and the bias frames take care of pattern noise that may be present in all frames. I am not yet entirely convinced that bias frames make any difference to the final results, but since it takes so little effort to include them, I use bias calibration routinely. I also find that with less than pixel-perfect tracking (and when is it ever pixel perfect anyway?!) using "min-max excluded average" in stacking introduces a natural dithering that does a wonderful job of getting rid of all those hot/cold pixels. There is no need to worry about cooling the DSLR when the imaging is carried out under reasonable ambient temperatures, 25 °C or cooler.

What we are looking for is a histogram of the skyfog that looks a bit like the one at top right in the accompanying picture. Note the clear gap between the origin and the trailing edge of the skyfog mountain, here and also on the histogram at the back of the camera. The image on the LCD should look similar to the frame shown, obtained from Raw using non-linear conversion; so basically we are examining a Luminance Histogram of a JPEG capture, inclusive of a gamma stretch. Note that a Linear conversion (no gamma stretch) from Raw will be very much darker, with perhaps only a couple of stars showing up. We need pay no attention to that at all for purposes of estimating minimal exposure length. All we are really interested in is that there is a gap between the origin and the trailing edge of the skyfog mountain, and a gap on the back-of-camera histogram will also be a gap on the histogram of a file that has been obtained using Linear conversion from Raw.

The above frame was taken at a very dark, high altitude site, 2-minutes exposure at ISO 1600 on a modified Canon 20D with a rectangular passband UV/IR Blocker, Canon 200mm lens + 1.4x Extender, yielding 280mm at f4. Notice that the skyfog is a chocolaty/toffee colour, which implies that the R, G, and B histograms will differ. The B histogram is considerably closer to the origin than the R. Read-Noise in the current DSLRs is typically restricted to well under 1% of the histogram's horizontal axis, even at ISO 1600. In a thermally very noisy camera, an older DSLR or one that is being used at a high ambient temperature, there will be a trail of hot and warm pixels that link our skyfog mountain to the origin.

Our aim is to yank that skyfog histogram well away from the Read-Noise by increasing the exposure length. If the camera is thermally very noisy, increasing the length of individual sub-exposures also increases the thermal signal and noise, and we simply become unable to attain that desired nirvana of having the skyfog statistics unadulterated in any major way by camera noises. We need to capture that skyfog mountain in a nice, clean fashion on a portion of the histogram that displays good linearity. Its width corresponds to the Poisson statistical noise of any photo-electric signal. The upper end (slope on the right side of the mountain) will also include the effect of bright stars or any bright regions of the fuzzy we are trying to image. We then stack a huge number of frames/subs, i.e. increase the integration time, to narrow this statistical mountain (that square root business) until we reach a stage where we can surgically subtract out the fog and are left only with our precious image signal pertaining to our faint fuzzy. The aim, therefore, is to keep our skyfog mountain unadulterated by any noise lurking at or near the origin of the histogram, in any sub-exposure, in any of the 3 R, G, B channels.

Always keep a clear gap between the trailing edge of the skyfog mountain (the beginning of the slope on the left of the mountain) and the origin! This was simply unachievable in uncooled astroCCDs or the DSLRs of yesteryear. With the clear gap, we are said to be operating in a skyfog-statistics-limited (SSL) regime. In this regime, when we stack N frames each T-minutes long, we end up close to the SNR (Signal to Noise Ratio) of a single, long exposure of NT minutes. Longer sub-exposures may still help in improving the SNR in our final, stacked result slightly, but with asymptotically diminishing benefits. Ultimately the SNR of N stacked subs of T minutes each can at best equal the SNR of one NT-minute exposure, and by being in the SSL regime we are attempting to get 90+% of the benefits with very much less attention to tracking accuracy, i.e. get most of the benefits of very long exposures without the tracking pains.

How close can you let that skyfog mountain get to the origin? Do not get too greedy. Just because the back-of-camera histogram shows a gap does not imply that you do indeed have a gap in all the 3 RGB channels, especially if you use a logarithmic display for your histogram. So aim for a healthy gap (say, 10% of the X-axis on the back-of-camera histogram); a zero gap implies that one or two of the RGB channels are probably not quite yet detached from the origin.
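To put a rough number on "90+% of the benefits", here is a minimal sketch of the usual simplified noise model, in which each sub contributes skyfog shot noise (Poisson, so variance equals the signal in electrons) plus one dose of read noise. The function name and the example electron counts are hypothetical, chosen only for illustration; thermal noise and the target's own shot noise are ignored.

```python
import math

def stack_snr_fraction(sky_e_per_sub: float, read_noise_e: float, n_subs: int) -> float:
    """Fraction of the single-long-exposure SNR retained by a stack of n_subs frames.

    Simplified model (an assumption, not a measurement): the only noise terms are
    skyfog shot noise and read noise, both expressed in electrons.
    """
    # The stack pays the read noise once per sub-exposure.
    stack_variance = n_subs * (sky_e_per_sub + read_noise_e ** 2)
    # One exposure of the same total length pays the read noise only once.
    single_variance = n_subs * sky_e_per_sub + read_noise_e ** 2
    # The collected signal is identical, so the SNR ratio is the inverse noise ratio.
    return math.sqrt(single_variance / stack_variance)

if __name__ == "__main__":
    # Hypothetical values: 5 e- read noise, 56 subs, increasing skyfog per sub.
    for sky in (25, 100, 400, 1600):
        print(sky, round(stack_snr_fraction(sky, 5.0, 56), 3))
```

As the skyfog collected per sub grows relative to the square of the read noise, the fraction climbs towards 1, which is the diminishing-returns behaviour described above.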

Stacking

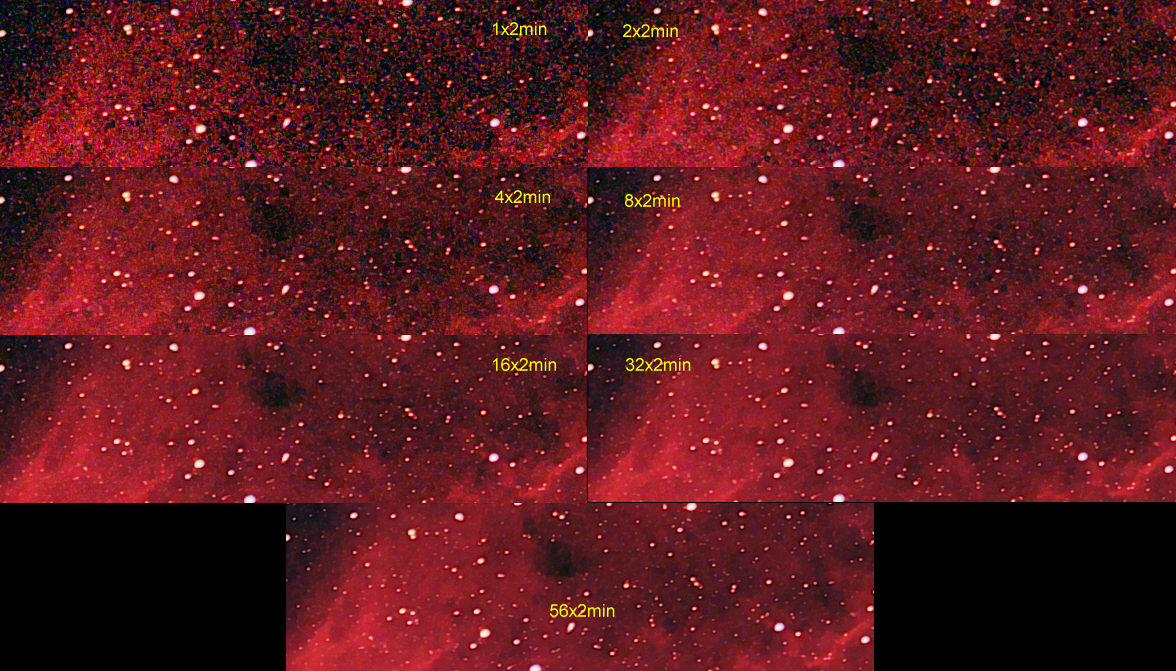

So, can such short exposures be stacked to yield reasonable images? Actually, yes. My imaging run delivered 56 frames each exposed for 2 minutes; the above being just typical of many. I calibrated the Linear-converted frames with bias, darks and flats, aligned them and then stacked them in batches of 2, 4, 8, 16, 32 and 56. I post-processed the 32x2min batch in ImagesPlus to yield something that looked reasonable and then applied the process history to all the other stacks. Here is what I got, with the same small crop displayed at 1:1:

I don't know about you, but I am rather fascinated by the noise going down in such deliberate steps. Each doubling of the number of frames brings its own very obvious reward, and even the full stack of 56 shows potential for further improvement. Naturally, when we present our final processed image we can hide a multitude of sins, be it slight mistracking or noise, by using a small display, but if you are chasing large prints you can never have enough integration time! Below is the full frame version for various integration times, at the very dark, high altitude site and in light polluted suburbia. An outer suburb of a large city would typically have skyfog that is 3 stellar Magnitudes brighter than the dark site used for the above frames. This means that the skyfog is 2.512*2.512*2.512 ≈ 15.85, or about 16 times brighter than at the above very dark site. Let us use 15x as a round number. The integration times required in suburbia (with 15x greater skyfog, 3 stellar Magnitudes) to achieve equivalent SNR are indicated below. The earlier 1:1 crops are from around the eye of the giant squid below.
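The magnitude arithmetic above can be sketched in a few lines. This assumes the standard definition in which 5 magnitudes correspond to a factor of 100 in brightness (so one magnitude is about 2.512x); the function names are hypothetical.

```python
def skyfog_ratio(delta_magnitudes: float) -> float:
    """Brightness ratio for a sky that is delta_magnitudes brighter (5 mag = 100x)."""
    return 100 ** (delta_magnitudes / 5)

def suburban_integration_minutes(dark_site_minutes: float, delta_magnitudes: float) -> float:
    """Integration time needed under the brighter sky for the same SNR.

    Assumes the skyfog-statistics-limited regime, where the required
    integration time scales in proportion to the skyfog brightness.
    """
    return dark_site_minutes * skyfog_ratio(delta_magnitudes)

if __name__ == "__main__":
    print(skyfog_ratio(3))                       # close to 15.85
    print(suburban_integration_minutes(12, 3))   # minutes in suburbia matching 12 dark-site minutes
```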

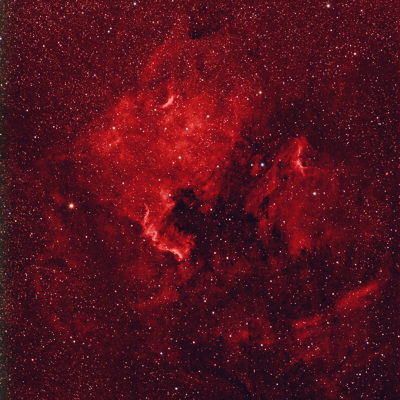

It's heartening to see that at this presentation size it is indeed possible to get quite a reasonable image with a few hours' integration time in light-polluted suburbia. For the mathematics behind the noise behavior, see "Maths behind Skyfog-Statistics Limited regime" in the references at the end. Actually the situation is even more promising for H-alpha nebulae like the above. One can conveniently capture the nebulosity with pretty good SNR by using a narrowband H-alpha filter over a couple of hours, followed by a few frames to record the star colours imaged in white light. This is exactly what I did for the image below, taken at a Montreal suburb, yielding a SNR good enough for a large print:

Nevertheless it is quite obvious that the quality of our output when printed will almost always demonstrate a starvation in photons, even at only slightly light polluted sites. It really takes quite heroic efforts for those without a permanent set-up to achieve much over 3-hours integration time per image. So closing down a lens to deliver sharper stars is anathema. To visualize what closing down the aperture by a full f-stop does, f2.8 to f4, just compare the earlier noise swatches for, say, 16x2min and 8x2min. Sobering. That aperture setting needs very delicate handling and is a major reason why zooms are much less effective than primes for astrophotography.

What to do at a typical suburban light-polluted site using a lousy mount

We can shoot sub-exposures that are 15x shorter and still remain in the Skyfog-Statistics-Limited regime

We need to shoot 15x longer integration time to achieve the same SNR in the final, stacked and processed image

Since we found that 2-minute exposures at f4 were sufficient to put us in the SSL regime at the dark site, we expect that one-fifteenth of that will also be sufficient at the suburban site: 8-second exposures! I find that the most integration time I can practically achieve on a single target in one night is about 3 hours, allowing for set-up time and shooting the target while it is still near the meridian where the seeing is good. Three hours integration time means that we can hope to achieve a SNR in our final, stacked and processed image equivalent to 1/15th that integration time at a dark site, i.e. 180/15 = 12 minutes integration time at the dark site, somewhere between the 4x2min and 8x2min example noise crops shown earlier above. But with 8-sec subs one needs to stack 1350 frames to achieve 180 minutes integration time! Quite impractical, and very likely with so many frames being stacked we may find that the noise statistics do not behave entirely as our simplistic theory predicts. If we can track accurately for 2 minutes then our stack gets to a very manageable 90 frames. So we shoot 90x2min frames at a much lower ISO, say, ISO 200; or 180x1min frames at ISO 400. To retain dynamic range we try to keep the skyfog mountain to between 15% (recall the 10% minimum gap!) and one third of the X-axis on the back-of-camera histogram. You can easily improve your SNR by a factor of 2x or perhaps even better, with the same integration time, by using a Light Pollution Suppression filter. Do it! For what filters can do for you at a light polluted site, see the reference of that name at the end.
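The arithmetic in the paragraph above can be sketched as a small planning helper. The function names are hypothetical, and the 15x skyfog factor is the round number used in this article, not a measured value for any particular site.

```python
import math

def minimum_sub_seconds(dark_site_sub_seconds: float, skyfog_factor: float) -> float:
    """Shortest sub that stays skyfog-statistics-limited under a brighter sky."""
    return dark_site_sub_seconds / skyfog_factor

def frames_needed(integration_minutes: float, sub_seconds: float) -> int:
    """Number of subs required to reach the desired total integration time."""
    return math.ceil(integration_minutes * 60 / sub_seconds)

def dark_site_equivalent_minutes(integration_minutes: float, skyfog_factor: float) -> float:
    """Dark-site integration time that would give the same final SNR."""
    return integration_minutes / skyfog_factor

if __name__ == "__main__":
    shortest = minimum_sub_seconds(120, 15)            # 2-minute dark-site subs at f4
    print(shortest)                                    # 8.0 seconds
    print(frames_needed(180, shortest))                # 1350 frames for 3 hours
    print(frames_needed(180, 120))                     # 90 frames with 2-minute subs
    print(frames_needed(180, 60))                      # 180 frames with 1-minute subs
    print(dark_site_equivalent_minutes(180, 15))       # 12.0 minutes
```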

This leads us to conclude that a lousy mount that tracks precisely for less than a minute can be quite adequate at a light-polluted suburb provided we do not encumber it with an OTA having a very slow focal ratio. Even 8sec sub-exposures already put you in the SSL regime with an f4 camera lens. With an LPS filter we should be quite comfortably in the SSL regime using 20sec exposures with an f4 lens. With a typical small ED refractor working at f5 to f7.5 and an LPS filter we might still get away with 1-minute subs. But almost invariably we tend to under-estimate the integration time required for satisfactory SNR in the final stacked result. Measure your skyfog (here's how), look at the noise crops above, and decide what you are willing to settle for in terms of integration time. Doing astrophotography at a suburban home will often imply having to compile your integration time over multiple nights, not a major hassle if you have a permanent set-up.

To go further: First, you need a modified DSLR that has ample sensitivity to H-alpha. Next, use a narrowband H-alpha filter, the narrower the better. This filters away much of your skyfog. You should then find that you need much longer sub-exposures to keep that 10% gap on the histogram, perhaps as much as 2-minutes at f4, but your SNR with a couple of hours integration time should be very nice on those red nebulae. The overall message for suburbia with a lousy mount is to use:

A modified DSLR

A fast OTA, e.g. a camera lens at f4 or f2.8; shorter focal lengths also improve tracking

An LPS filter for galaxies and reflection nebulae, narrowband filters for emission nebulae

Very, very ample integration times, implying hundreds of frames in a stack

If your tracking is good for a minute or longer at the shorter focal lengths you can benefit greatly from narrowband filters. I found that a 13nm Astronomik H-alpha filter can produce very satisfactory imaging in some suburbs using 2-minute subs at f4.5, but is too wide for my own home base. The Hutech H-alpha pop-in filter that goes in-between the mirror box and the lens is particularly attractive if you have a UV/IR Blocker over your sensor in a modified DSLR. This filter has a narrower bandwidth and should easily allow shooting at f2.8 or f3.5 with an appropriate lens. Unfortunately the light pollution in suburbia tends to have very strong gradients of different colours in different directions, e.g. a supermarket parking lot with mercury lighting on one side and sodium street lights on another side. Such multi-gradients are very difficult to handle when you use wide angle lenses and they tend to put a lower bound to the focal lengths you can use for your imaging with the wider-band filters. I find that focal lengths of 100mm to 400mm can work quite well. Too long and you run into tracking disabilities, too short and you run into those pesky sky gradients becoming too difficult to process out.

To Summarize

At any location, dark or light polluted, with or without filters, your minimum sub-exposure per frame needs to be long enough at ISO 1600 to see a gap of circa 10% of the X-axis on the back-of-camera histogram between the origin and the trailing edge of your skyfog mountain. You need your mount to be able to track that long with your chosen OTA. At a very dark site doing white light imaging (no narrow filters) you should find the following exposure times adequately long: 1min at f2.8, 2min at f4, 4min at f5.6, 8min at f8, 16min at f11. It is obvious therefore that the lousier your mount is, the more you need a fast OTA or lens.

Decide what maximum length of exposure your mount can track precisely with your OTA of choice. Let us say, one minute. Shoot a one-minute frame at ISO 1600. Does that give you a nice gap, between 10% and 20% of the X-axis? If yes, go ahead and use one-minute frames. If there is no gap, then your ambitions are greater than your mount and OTA can satisfy. Try using a faster focal ratio OTA, e.g. a camera lens. If the gap is too large, thus eating into your upside dynamic range for brighter stars, then simply use a lower ISO.

There is no overwhelming merit in using very long exposures just because you are able to track them, e.g. when autoguiding. When conditions are flexible and I am autoguiding I try to keep the stack of frames somewhere between 20 and a hundred for my desired integration time. All you are doing with unnecessarily long exposures is introducing risks of mistracking, field rotation, satellite tracks, planes, passing cars, etc. for no very good reason.

White-light imaging at a light-polluted site implies that you can get away with very short sub-exposures. A typical, darkish suburb would have 15x greater skyfog than a very dark site in a remote area. Indeed you can in theory use sub-exposures that are 15x shorter, but you also need 15x longer integration time. That's 15 x 15 = 225x more frames to stack! So practicalities mean that we cannot really use the very short exposures that are in theory allowable when chasing very dim fuzzies, but the very short exposures work fine when shooting the brighter objects, like star clusters, that do not need long integration times anyway.
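The dark-site exposure table in this summary follows from the light reaching the sensor per unit time scaling as the inverse square of the focal ratio. A minimal sketch, assuming the 1 minute at f2.8 reference quoted above and a hypothetical function name:

```python
def minimum_sub_minutes(f_ratio: float,
                        skyfog_factor: float = 1.0,
                        reference_minutes: float = 1.0,
                        reference_f_ratio: float = 2.8) -> float:
    """Estimated minimum sub-exposure for the skyfog-statistics-limited regime.

    Scales the dark-site reference (1 min at f2.8, from the text) by the square
    of the focal ratio, then divides by how much brighter the local skyfog is.
    """
    return reference_minutes * (f_ratio / reference_f_ratio) ** 2 / skyfog_factor

if __name__ == "__main__":
    # Marked f-numbers are rounded, so these land near, not exactly on,
    # the 1, 2, 4, 8, 16 minute doubling sequence quoted in the text.
    for f in (2.8, 4, 5.6, 8, 11):
        print(f, round(minimum_sub_minutes(f), 2))
    # Suburban sky taken as 15x brighter: roughly 4 seconds at f2.8.
    print(minimum_sub_minutes(2.8, skyfog_factor=15) * 60)
```

The f11 result comes out a little under 16 minutes because the marked f11 stop is a rounding of about f11.3; the doubling sequence in the text is the practical rule of thumb.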

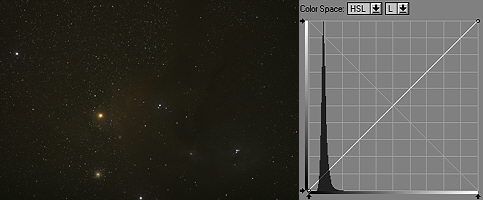

Anyway, for the skeptics, I searched my old images for some nebulae shot with one-minute subs, and found the Antares region imaged in Australia back in 2005. The mount was improperly balanced and I could not shoot longer than 60 seconds without the stars trailing. So here it is, 59x1min subs at f2.8, ISO 800, Canon 200mm lens, modified Canon 20D with a rectangular passband UV/IR blocker. These days I would prefer using ISO 1600 to get ever so slightly better quantization precision at these low histogram levels. A typical single frame as it would appear on the back-of-camera LCD, and its Luminance Histogram:

Rather unpromising; but check out what a stack of 59 delivers:

Conclusions

As long as we expose long enough for the entire skyfog mountain to be entirely detached from the camera's Read Noise we can be reasonably confident that stacking N exposures each T-minutes long will be closely equivalent to shooting one very long exposure that is NT-minutes long, yielding similar SNRs in the final processed images. The 2006 crop of Canon DSLRs do seem to have very low levels of Read Noise, all confined to well within one percent of the histogram's origin. Consequently all we need do is to expose long enough so that there is a clear gap between the origin and the trailing edge of the skyfog mountain on the histogram. A gap of, say, 10% of the X-axis, on the back-of-camera histogram assures us that the histograms in all the 3 R, G, and B channels have some gap and that in all the channels we are in the desired skyfog-statistics-limited regime. The longest minimal exposures are required at extremely dark sites, but even there one-minute at f2.8 is quite satisfactory. Somewhat paradoxically, in theory, we can get away with much shorter sub-exposures at light-polluted suburban sites, as little as 4 seconds at f2.8. But this does not help much because, at these sites, where sub-exposures can be 15x shorter, we also have to shoot a total integration time that is longer by the same factor, 15x, as the individual exposures may be shortened; quickly making the number of frames required in the stack absurdly large. I hope the above allows the reader to understand the interplay of these facets and to come up with his own recipe that matches his own mount's capabilities. Basically, things remain the same. With a mount that tracks poorly, use a short focal length, fast-focal-ratio lens or OTA (f2.8 is great!). The Canon 200mm/2.8L II lens is an excellent compromise OTA; fast, short-enough focal length for most mounts to track reasonably well, and enables very short sub-exposures. You can even autofocus it on any bright star or planet. 
With a really terrible mount (e.g. some of the cheaper fork-mounts) you may have to go for the Canon 100mm/2.0 or the 100mm/2.8macro USM. Nikon has similar offerings. Please note that you do NOT need a telescope to do great deep sky imaging. Just pick your targets appropriately. An LPS filter improves your output SNR noticeably, even for bluish reflection nebulae and galaxies, but for a major jump on red emission nebulae you need a modified DSLR that has ample sensitivity to H-alpha and to use it with the narrowest H-alpha filter you can lay your hands on.
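The 10% gap criterion from these conclusions can also be checked per channel on a saved frame, rather than by eye on the camera's luminance histogram. The sketch below is only an illustration: it assumes 8-bit, gamma-stretched pixel values (comparable to the back-of-camera JPEG rendering), takes the trailing edge of the skyfog mountain to be a low percentile of each channel so that a few dead pixels are ignored, and uses hypothetical function names and synthetic data.

```python
def trailing_edge_fraction(pixel_values, full_scale=255, low_percentile=1.0):
    """Approximate position of the skyfog mountain's left edge, as a fraction of the X-axis.

    Uses a low percentile instead of the minimum (an assumption of this sketch)
    so that a handful of dead or cold pixels does not hide a real gap.
    """
    ordered = sorted(pixel_values)
    index = min(int(len(ordered) * low_percentile / 100), len(ordered) - 1)
    return ordered[index] / full_scale

def channels_with_gap(channels, min_gap=0.10, full_scale=255):
    """Report, per channel, whether the trailing edge clears the minimum gap."""
    return {name: trailing_edge_fraction(values, full_scale) >= min_gap
            for name, values in channels.items()}

if __name__ == "__main__":
    # Synthetic example: a reddish sky where the blue channel hugs the origin.
    frame = {
        "R": [0] + [60] * 99,   # one dead pixel, skyfog around level 60
        "G": [45] * 100,
        "B": [15] * 100,
    }
    print(channels_with_gap(frame))
```

In this made-up frame the red and green channels clear the 10% mark while blue does not, which is the situation the per-channel warning is meant to catch.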

What if even the above quoted minimal-length subs are too long for your mount and OTA? E.g. because you have a very slow f10 SCT on a wobbly mount and you are already bored with lunar/planetary imaging? Do not despair! Start attacking star clusters rather than nebulae and galaxies. Yes, you can get away with subs as short as a few seconds at f10. Examples at: Really short subs at slow focal ratios

If in your own investigations and for other reasons you find great merit in extending sub-exposures well beyond the minimum required to put you in the skyfog-statistics-limited regime (10% gap at ISO 1600) then I would love to hear of your conclusions. Do drop me an email.

Some Relevant References

Maths behind Skyfog-Statistics Limited regime

What filters can do for you at a light polluted site

Hutech modified DSLRs and pop-in Front Filters (between mirror box and lens)

Narrowband filters by Astronomik, Baader (also via Alpine Astronomical), Astrodon, Custom Scientific


To drop me an e-mail just click on: samirkharusi